AI Problem Framing for AI Practitioners

Rajiv Shah

ML Engineer with 10+ years of experience

Most AI fails from bad framing, not bad models. Learn to fix that.

AI Problem Framing is to AI teams what System Design is to software engineers and Product Sense is to PMs. Whether you're building, evaluating, or leading AI work, this is the foundational thinking skill that separates results from rework.

---

🚨 Pilot cohort: February 16 – March 20, 2026, limited to 25 students. 🚨

This is the first run of the course, which means:

• Never-before-published material: you see it first!

• Smaller cohort = more direct access to me

• Pilot pricing won't last

---

What you get:

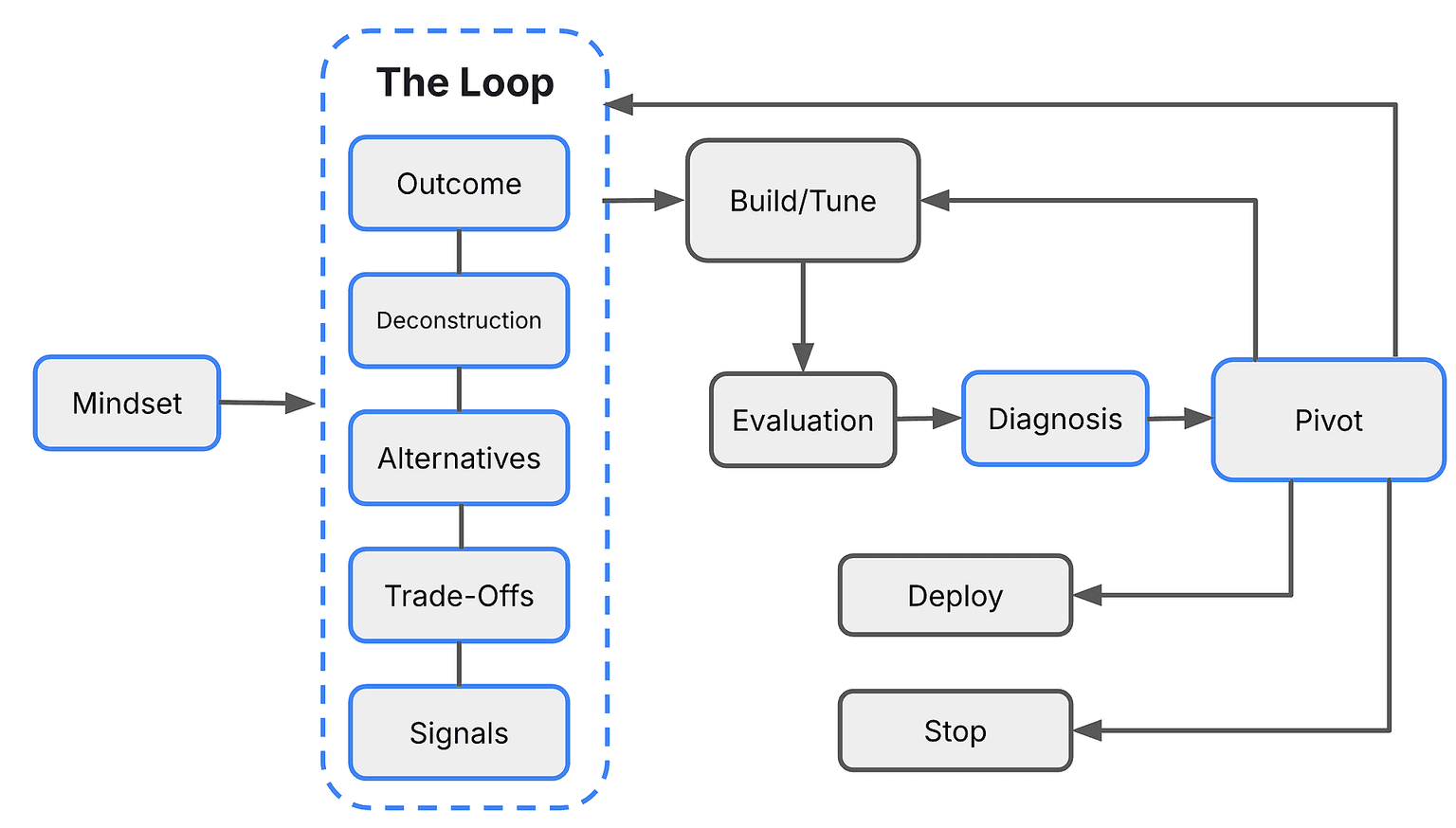

🔄 The Loop, a 5-step framework you'll use to think through every AI project

🧑🏫 Live sessions and office hours where you can bring your real problems

📊 Access to 200+ case studies, the largest collection of AI reframing examples

📋 Production-ready checklists for RAG, Forecasting, and GenAI

🎥 Lifetime access to all recordings

---

This course is for you if:

• Your AI works in demos but fails in production

• You've spent months on a model only to realize you solved the wrong problem

• You lead AI work but came from engineering, product, or PM

You'll learn from 200+ AI failures in 5 weeks, gaining years of experience and learning to recognize the scars.

What you’ll learn

How to think through AI problems end-to-end: scoping, debugging, and knowing when to pivot.

Use the Loop: apply a 5-step framework (Outcome, Deconstruction, Alternatives, Trade-offs, Signals) to your AI solutions.

Learn to ask the questions that reveal whether you're solving the right problem.

Recognize the signals that tell you what's actually broken.

Learn when to fix the data, the architecture, or the framing, and how to tell the difference.

Shift from executor to AI Architect: question requirements before building them.

Push back with evidence: "I know you want a chatbot, but here's why search is better."

Study real failures so you can spot the warning signs before they become expensive.

Set realistic expectations and catch bad framings before the team spends months on them.

Translate AI trade-offs into business terms stakeholders actually understand.

Learn directly from Rajiv

Rajiv Shah

Enterprise AI veteran: shipping AI to production with clarity and humor

Who this course is for

Engineers who can build AI but want to master outcome engineering: knowing what to build, not just how

Building RAG, agents, or ML models? Let's get your demos into production! Learn the thinking that makes the difference.

Managers who need to evaluate AI/ML proposals, set realistic milestones, and know when to escalate

Prerequisites

You understand what AI/ML is at a conceptual level

We focus on framing and strategy, not on explaining what models are or how training works. Basic vocabulary lets us go deeper faster.

You've worked on or led AI projects in some capacity

Real experience gives you context. The frameworks click when you can map them to projects you've actually built or managed.

No coding during the course; frameworks for thinking, not tutorials

This is about decision-making, not implementation. You'll learn what to build and why, not how to code it.

Course syllabus

9 live sessions • 62 lessons • 11 projects

Week 1

Lesson 1: Sharpening Your Approach to AI Problems - Developing the Mindset

Lesson 2: AI Alternatives - What is Possible with AI

Feb 17

Optional: Exercises + Live Office Hours 1

Feb 19

Optional: Exercises + Live Office Hours 2

Week 2

Lesson 3: The Loop - A Framework for AI Problem Framing

Feb 23

Optional: Exercises + Live Office Hours 3

Feb 26

Optional: Live Office Hours 4

Schedule

Live sessions

2 hrs / week

A combination of live sessions covering the material and office hours for your questions. Depending on the time zones of the final cohort, I may adjust some of these times to cover as many people as possible.

Tue, Feb 17

7:00 PM–8:00 PM (UTC)

Thu, Feb 19

9:00 PM–10:00 PM (UTC)

Mon, Feb 23

7:00 PM–8:00 PM (UTC)

Projects

1 hr / week

Optional exercises you can do to dig deeper and master the content

Recorded Lessons

1-2 hrs / week

Lectures are recorded for your convenience

Voices from the field

"The key question for enterprise AI is: what problem are you actually solving?" -- Alexandru Vesa, MLOps Engineer

"Tools go in and out of style quickly, but fundamental approaches to problem solving should last a bit longer." -- Chip Huyen, Stanford ML Systems Design

"The future of work is all of us becoming managers of AI." -- Richard Socher, former Chief Scientist at Salesforce

Course Map

From Mindset through The Loop to Build, Evaluate, and the Pivot decision in one view.

What You Will Learn

The Strategy & Mindset

- The 5-Step Loop: A structured engineering lifecycle for deconstructing and reframing complex AI problems before writing code.

- 18 AI Architectures: A complete "Menu" of options, from Ranking and Forecasting to Agents and RAG, so you never fall into the Maslow's hammer trap (when all you have is a hammer, everything looks like a nail).

- 4 Framing Lenses: Mental models to break System 1 pattern-matching and force rigorous architectural thinking.

The Diagnostics

- 8 Sanity Checks: The "Integrity Suite" of tests (like Random Labels and Time Splits) to confirm that your project is actually working and not just benefiting from leakage or noise.

- 8 Input Diagnoses: How to identify common data input failures.

- 6 Pivot Signals: A "Smell Test" for recognizing when an architecture has hit a dead end (and exactly what to do about it).

The Toolkit

- 5 Canvases & Checklists: Course-exclusive worksheets for running strategy sessions, including the Atomic Unit Canvas, Trade-off Radar, and Signals Dashboard.

- 3 Decision Trees: Logic maps for routing diagnosis to action (e.g., "If Retrieval fails → Fix Chunking, not Prompting").

The Reference Library

- 200+ Case Studies: A searchable database of real-world pivots (Netflix, Uber, Stripe) mapped to the 18 architectures in the course.

Participation & Code of Conduct

Participation Expectations

This is a practitioner-focused cohort built on trust and discussion.

Participants are expected to engage respectfully and professionally.

Disruptive behavior, bad-faith participation, or misuse of course materials may result in removal.

Content Use Policy

Course content, discussions, slides, and materials are for personal educational use only.

Recording, transcribing, scraping, or redistributing any portion of the course is not permitted.

This includes sharing with employers or third parties.

Violation may result in removal from the course.