Evals for Voice AI: Learnings from Google Evals Team

Hosted by Ravin Kumar

In this video

What you'll learn

Multimodal Evals Challenges

Gain practical insights for building, benchmarking, or researching evals in voice and other multimodal contexts.

Design Voice AI Evals

Learn from practical experience building evals for real-world voice-based conversational AI products like NotebookLM.

Evaluation-Driven Development

Use evals to measure real-world conversational quality and to set eval metrics and guardrails in voice systems.

Why this topic matters

How should you run AI evals for voice-based products? How do evaluation frameworks shape the development, reliability, and user experience of emerging voice AI systems? Using Google’s NotebookLM as a case study, we’ll dive into practical insights on designing evals that capture real-world conversational quality, reasoning depth, and responsiveness in multimodal and voice-based contexts.

You'll learn from

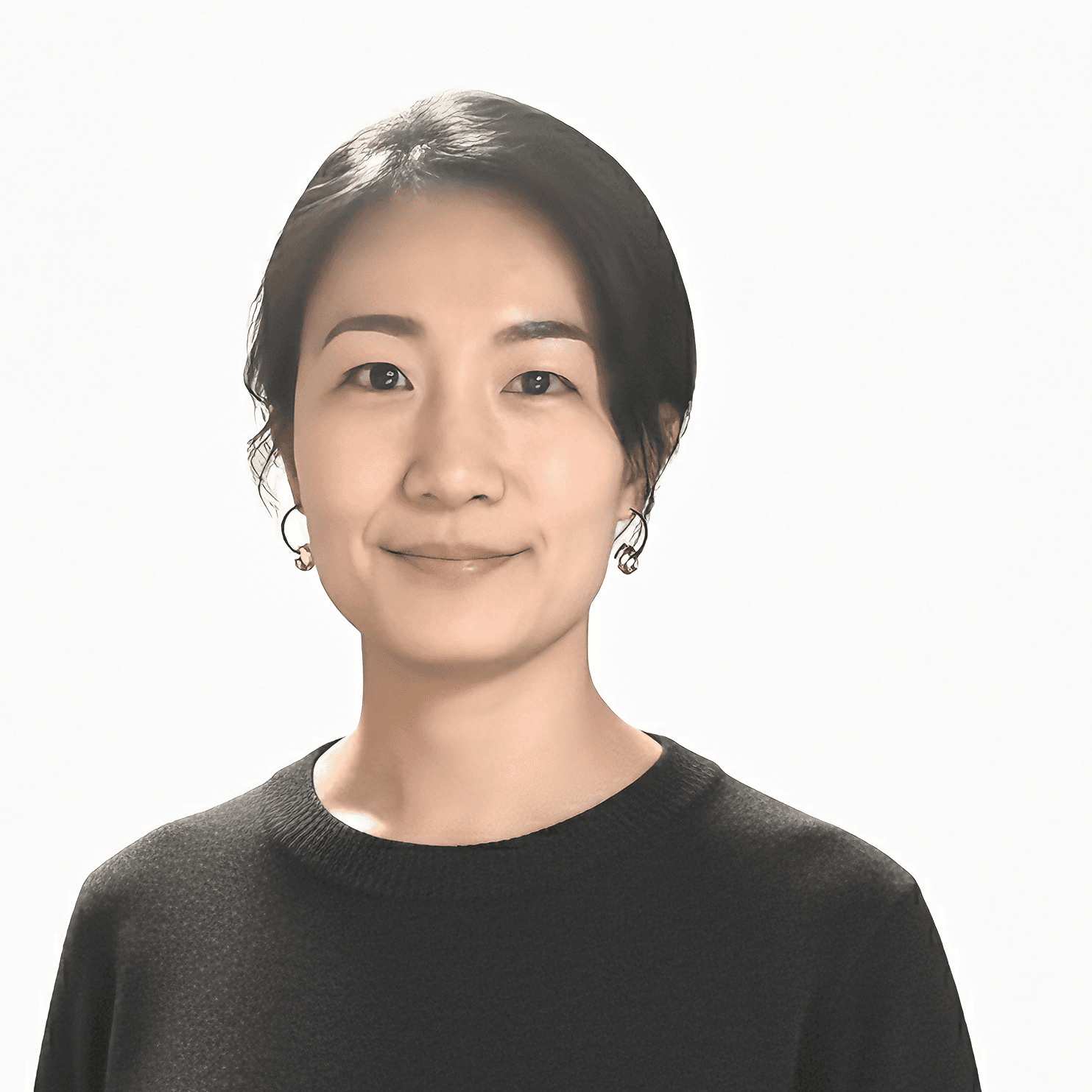

Ravin Kumar

Sr. Researcher at Google DeepMind

Ravin is a Senior Researcher at Google DeepMind, where he has worked on LLMs and on trust and safety, including trust and safety for LLMs.

Ravin has over a decade of experience in applying math, statistics, and computation to solve challenging problems in various domains, such as aerospace, food, and open source.

Ravin is also a core contributor to PyMC, the leading platform for statistical data science. He is passionate about making math accessible and useful for everyone, and he has written a book and published several papers on this topic.

Go deeper with a course

AI Evals and Analytics Playbook

Stella Liu and Amy Chen

Head of AI Applied Science. Cofounder at AI Evals & Analytics
