AI Evals and Analytics Playbook

Stella Liu

Head of AI Applied Science

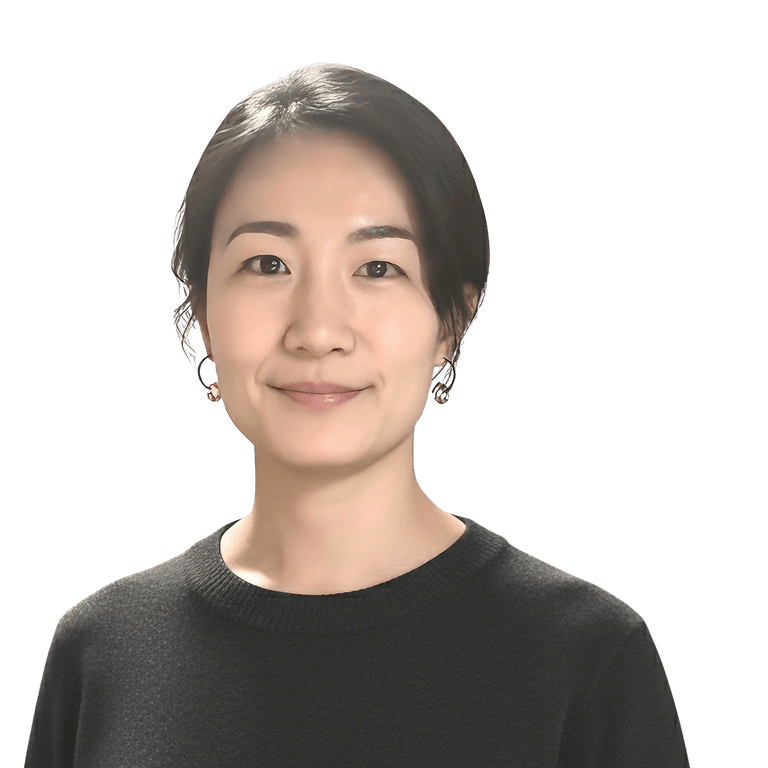

Amy Chen

Cofounder, AI Evals & Analytics

Build Effective AI Evals to Drive Product Success

From pre-release testing to in-production monitoring and business impact measurement

-

Over 85% of AI projects fail to deliver real value or reach production.

Building AI in 2026 is easy. Building AI that is reliable, safe, and trusted is not.

Evaluation-Driven Development (EDD) is becoming essential. It enables teams to iterate quickly, make informed decisions, ship with confidence, and earn user trust and organizational credibility.

But evaluation alone is not enough. AI Product Analytics completes the picture by measuring real-world impact once your AI product meets real users.

-

🧰 About This Course

❌ This is NOT a coding course, because you don’t need one.

🧠 What you need is a mental model shift.

🧭 We guide you through that shift.

In this course, we share the framework and playbook we use to evaluate and monitor thousands of real-world AI applications.

By the end, you’ll have a clear, organization-ready AI evaluation and analytics plan to ship, iterate, and stay accountable in the real world.

-

AI evals is a fast-moving space. Enrolled students receive first-hand insights and ongoing updates on the latest developments, even after the cohort ends.

What you’ll learn

Learn how to evaluate, ship, and monitor AI products that perform reliably in production.

4 live sessions (2 hours each) over 2 weeks, focused on real product decisions

Make the mental model shift from traditional software to AI products

Build your AI Evals & Analytics playbook for your current product use case

Define clear ownership for rubrics, metrics validation, and go/no-go decisions

Align Product, Data, Legal, Trust & Safety, and SMEs without slowing delivery

Establish evaluation as a decision gate, not a bottleneck

Run early qualitative evaluations and build quantitative evaluation pipelines

Apply Evaluation-Driven Development (EDD) to guide product iteration during development

Choose the right methodologies, sample sizes, and success criteria

Set up leading indicators (retry rates, confidence scores) and lagging metrics (CSAT, cost)

Build escalation procedures and run structured post-launch reviews at 15, 30, and 60 days

Use analytics to inform iteration, rollback, and roadmap decisions

Who this course is for

Product Leaders

Building AI products and needing a clear playbook for evaluation frameworks, success metrics, and shipping with confidence.

Data Leaders

Redefining team scope and structure in the AI era, aligning evaluation, analytics, and accountability.

Seasoned Data Scientists

Leveling up with AI product skills: learn AI evals and LLM-as-a-judge, and design AI-specific performance metrics.

What's included

Live sessions

Learn directly from Stella Liu & Amy Chen in a real-time, interactive format.

Your First AI Evals and Analytics Playbook

Create your first AI Evals playbook and apply it to your current projects.

Glossary Sheet

Master the terminology with clear definitions and practical examples for every key concept in AI Evals.

Lifetime access

Go back to course content and recordings whenever you need to.

Community of peers

Stay accountable and share insights with like-minded professionals.

Certificate of completion

Share your new skills with your employer or on LinkedIn.

Maven Guarantee

This course is backed by the Maven Guarantee. Students are eligible for a full refund up until the halfway point of the course.

Course syllabus

4 live sessions • 10 lessons

Week 1

Getting Ready for Session 1

Feb 21

Session 1: AI Evaluations - What & Why

Getting Ready for Session 2

Feb 22

Session 2: Evaluate AI Products Before Release

Week 2

Getting Ready for Session 3

Feb 28

Session 3: Evals Tooling/Vendors & Experiment

(Recorded Lesson) AI Evals Vendors

(Recorded Lesson) Test Set Generation & More

Getting Ready for Session 4

Mar 1

Session 4: Monitoring & Analytics

(Recorded Lesson) AI Evals Open Source Frameworks


Schedule

Live sessions: 4 hrs / week

Sat, Feb 21: 7:00 PM to 9:00 PM (UTC)

Sun, Feb 22: 7:00 PM to 9:00 PM (UTC)

Sat, Feb 28: 7:00 PM to 9:00 PM (UTC)

Sun, Mar 1: 7:00 PM to 9:00 PM (UTC)

Projects: 1 hr / week

Async content: 1 hr / week