AI Systems Design & Inference Engineering

Abi Aryan

ML Research Engineer and Book Author

From AI Engineer to AI Systems Engineer

If you’re an AI engineer who can already prompt or fine-tune models but you’ve never been able to answer questions like:

- Why is my 70B model using 120 GB of VRAM and still slow?

- How do I serve 500 concurrent users on 4xH100s without going broke?

- What actually happens inside FlashAttention / PagedAttention / tensor parallelism?

- How do I save my company millions running open models in production?

… then this is the course you’ve been waiting for.

What you’ll learn

Most engineers load a model with Hugging Face, serve something basic, and then get stuck on cost, latency, or scale. In this course, we will go well beyond that.

Learn to serve a tiny model (e.g. Phi-3-mini or TinyLlama) on a single consumer GPU in <30 minutes.

You'll build a concrete request-to-token mental model before we get into hardware and optimization details.

Use real tools to see exactly where time and memory are wasted.

We will write an LLM from scratch, and you'll learn to calculate exactly how many tokens and bytes your model will process and how much VRAM it will need.
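As a taste of that arithmetic, here is a minimal back-of-the-envelope sketch: VRAM is estimated as weights plus KV cache, ignoring activations and framework overhead. The model shape used below (7B parameters, 32 layers, 32 KV heads, head dimension 128, roughly Llama-2-7B-shaped) is an illustrative assumption, not a course exercise.

```python
# Back-of-the-envelope VRAM estimate: model weights + KV cache.
# Assumption: activations, CUDA context, and framework overhead are ignored.

GIB = 1024 ** 3


def weight_bytes(n_params: int, bytes_per_param: int) -> int:
    """Memory for the weights, e.g. 2 bytes/param for FP16/BF16."""
    return n_params * bytes_per_param


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int) -> int:
    """Memory for cached keys and values across all layers.

    The leading 2 accounts for storing both K and V.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical 7B-parameter model, roughly Llama-2-7B-shaped.
    weights = weight_bytes(7_000_000_000, 2)        # FP16 weights
    kv = kv_cache_bytes(32, 32, 128, 4096, 1, 2)    # 4k context, batch 1, FP16 cache
    print(f"weights:  {weights / GIB:.1f} GiB")
    print(f"KV cache: {kv / GIB:.1f} GiB")
    print(f"total:    {(weights + kv) / GIB:.1f} GiB")
```

The KV cache grows linearly with both context length and batch size, which is why high-concurrency serving often runs out of memory before it runs out of compute.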

You'll learn how to optimize for compute versus memory.

We will implement compute-management techniques first, then memory-management optimizations such as KV caching and quantization.

You'll learn exactly what works, when and why.
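One common way to reason about compute versus memory is arithmetic intensity: FLOPs performed per byte moved from memory, compared against the hardware's ridge point (peak FLOP/s divided by memory bandwidth). The sketch below applies this roofline-style check to a single FP16 linear layer; the peak-FLOP/s and bandwidth values are round placeholder numbers, not the spec of any particular GPU.

```python
# Roofline-style check for one linear layer: y = x @ W,
# with x of shape (batch, d_in) and W of shape (d_in, d_out).


def linear_arithmetic_intensity(batch: int, d_in: int, d_out: int,
                                bytes_per_elem: int = 2) -> float:
    """FLOPs per byte, counting one read of W and x and one write of y."""
    flops = 2 * batch * d_in * d_out  # one multiply + one add per multiply-accumulate
    bytes_moved = bytes_per_elem * (d_in * d_out + batch * d_in + batch * d_out)
    return flops / bytes_moved


def is_compute_bound(intensity: float, peak_flops: float, mem_bandwidth: float) -> bool:
    """At or above the ridge point the layer is compute-bound; below it, memory-bound."""
    ridge_point = peak_flops / mem_bandwidth
    return intensity >= ridge_point


if __name__ == "__main__":
    PEAK_FLOPS = 1e15  # placeholder: 1 PFLOP/s
    BANDWIDTH = 3e12   # placeholder: 3 TB/s
    for batch in (1, 64, 1024):
        ai = linear_arithmetic_intensity(batch, 4096, 4096)
        bound = "compute" if is_compute_bound(ai, PEAK_FLOPS, BANDWIDTH) else "memory"
        print(f"batch={batch:5d}  intensity={ai:7.1f} FLOP/B  -> {bound}-bound")
```

At batch size 1 (single-stream decoding) the intensity is about 1 FLOP per byte, so decoding is typically memory-bandwidth-bound; batching requests is what pushes the layer toward the compute-bound regime.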

Scaling models doesn't just mean throwing more compute at the problem

You'll learn how to design distributed inference systems, including orchestration and batching.

We will implement distributed inference for 70B–405B models.
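A first sizing question for 70B–405B models is whether the weights fit at all. Here is a minimal sketch, assuming weights are sharded evenly across GPUs by tensor parallelism, that the tensor-parallel degree is a power of two, and that 20% of each GPU is reserved for the KV cache and activations; these are illustrative assumptions, and the 80 GB figure corresponds to an 80 GB H100.

```python
GB = 10 ** 9


def weights_per_gpu_gb(n_params: float, bytes_per_param: float, tp_degree: int) -> float:
    """Weight memory per GPU when weights are sharded evenly across tp_degree GPUs."""
    return n_params * bytes_per_param / tp_degree / GB


def min_gpus_for_weights(n_params: float, bytes_per_param: float,
                         gpu_mem_gb: float, headroom: float = 0.8) -> int:
    """Smallest power-of-two TP degree whose per-GPU weights fit in headroom * gpu_mem_gb.

    Assumption: the remaining (1 - headroom) of each GPU is kept for KV cache and activations.
    """
    tp = 1
    while weights_per_gpu_gb(n_params, bytes_per_param, tp) > headroom * gpu_mem_gb:
        tp *= 2
    return tp


if __name__ == "__main__":
    H100_MEM_GB = 80
    for name, params in (("70B", 70e9), ("405B", 405e9)):
        for dtype, nbytes in (("FP16", 2), ("FP8", 1)):
            tp = min_gpus_for_weights(params, nbytes, H100_MEM_GB)
            per_gpu = weights_per_gpu_gb(params, nbytes, tp)
            print(f"{name} {dtype}: TP={tp}, {per_gpu:.1f} GB weights/GPU")
```

Under these assumptions a 70B model in FP16 needs at least 4 GPUs, while 405B in FP16 spills past a single 8-GPU node, which is one reason quantization (e.g. FP8) and multi-node parallelism come up for the largest models.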

By the end of the course, you will know how to choose among vLLM, SGLang, TensorRT-LLM, LLM-d, and other engines for different applications.

You will design your own inference engine.

You will learn to implement stateful tool calling and serve high-concurrency APIs.

1. Zach Mueller, Head of Dev Rel @ Lambda, ex-Hugging Face

2. Suman Debnath, Technical Lead ML @ Anyscale

3. Paul Iusztin, AI Engineer and Author (LLM Engineer's Handbook)

Learn directly from Abi

Abi Aryan

Founder and Research Engineering Lead @ Abide AI

Who this course is for

AI engineers, ML infrastructure engineers, and backend developers who own inference costs and need to cut them by 10–50×

Founders & engineers building LLM apps who are tired of burning money on OpenAI

AI engineers who haven't yet learned the system design needed to build reliable applications

Anyone on the job market for inference engineer or solutions architect roles

Prerequisites

You have shipped at least one non-trivial Python project

We won't be teaching Python basics.

You can already load and run an LLM using HF Transformers

If you have never touched AI models, this course is not for you.

You are comfortable with the terminal/SSH, git, and YAML and JSON files

Don't worry if you're new to Docker and Kubernetes; we can help.

What's included

Live sessions

Learn directly from Abi Aryan in a real-time, interactive format.

1400 USD in compute credits

A total of 1400 USD in compute credits from Modal, Anyscale, and Lambda to use on the course exercises

Lifetime access

Everyone who signs up gets lifetime access to the course, including the recordings and materials from future cohorts as I keep updating it.

Resume Review

If you are on the job market or planning a career transition, we will do a 1-on-1 resume review with you.

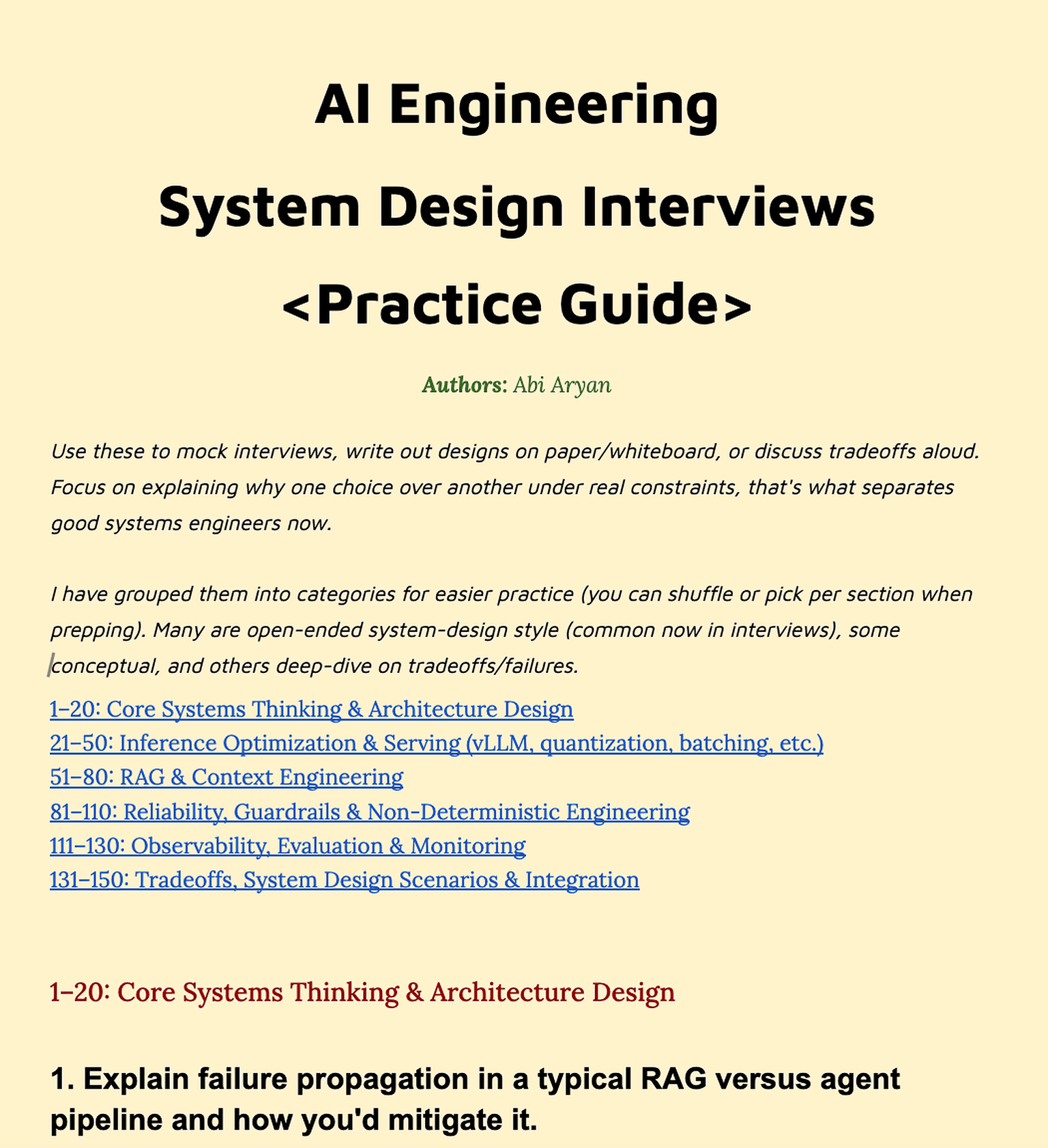

78-page system design interview guide

It includes 150 practice interview questions across six areas: (1) Systems Architecture Design, (2) Inference Optimization & Serving, (3) RAG & Context Engineering, (4) Reliability & Guardrails, (5) Observability, Evaluation & Monitoring, and (6) Tradeoffs, Scenarios & Integrations.

Community of peers

A Discord channel to stay connected with peers.

Guest Speakers

Learn from industry professionals and their experiences.

Certificate of completion

Share your new skills with your employer or on LinkedIn.

Maven Guarantee

This course is backed by the Maven Guarantee. Students are eligible for a full refund through the second week of the course.

Schedule

Live sessions

1-2 hrs / week

We will do our best to accommodate different time zones.

Sat, Feb 21

4:00 PM–6:00 PM (UTC)

Weekly Project

1-3 hrs / week

Abi will be available to help you in case you get stuck.

Quizzes and Async content

2-4 hrs / week

This is optional but incredibly helpful for further reading and reference.

$1,999 USD
