Evaluate AI Agents with Confidence

Hosted by Mahesh Yadav

In this video

What you'll learn

Assess LLM Suitability for Your Agentic AI

Design a Manual Evaluation Framework for AI Agents

Automate AI Evaluation with Observability & LLM Judges

Why this topic matters

You'll learn from

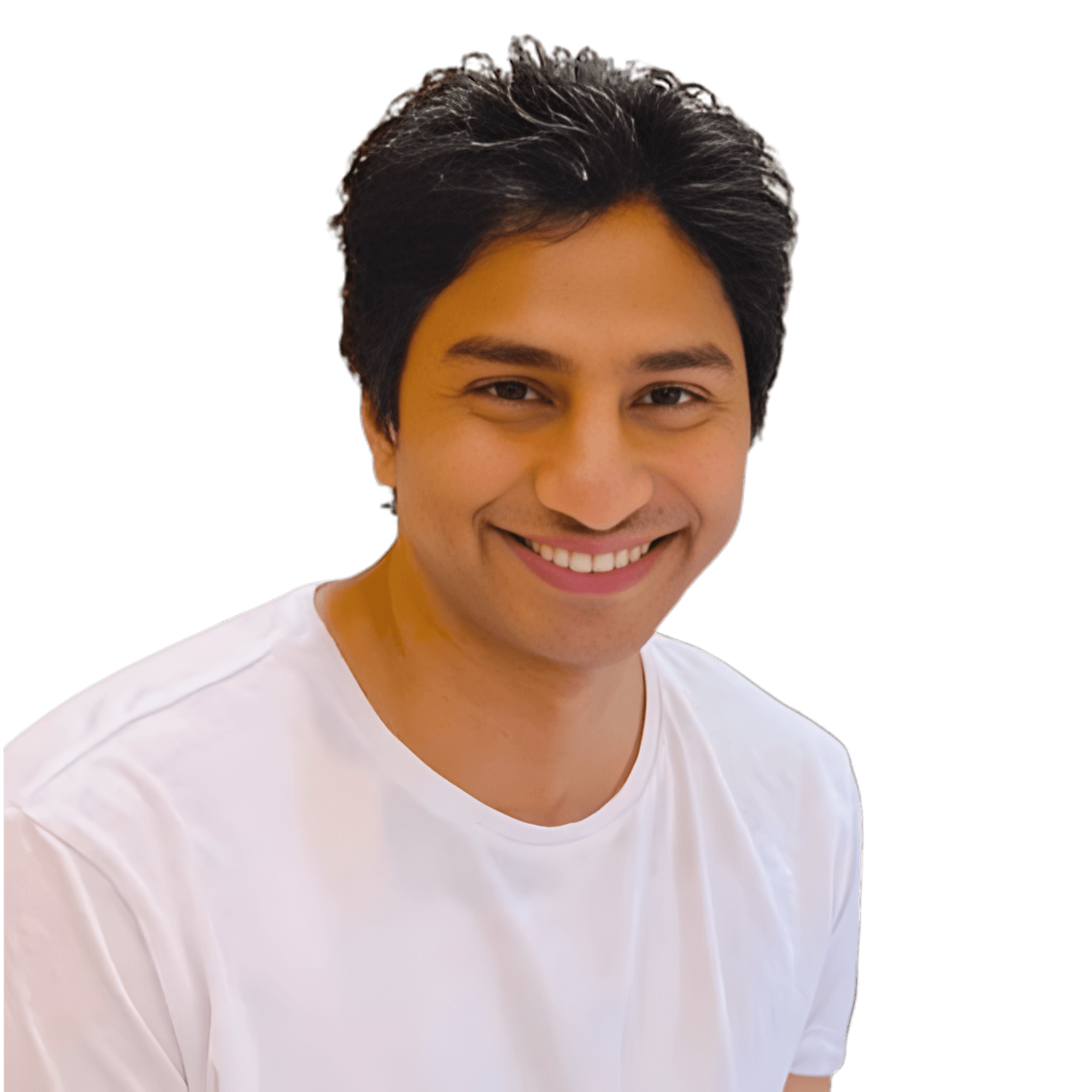

Mahesh Yadav

Gen AI product lead at Google; formerly at Meta AI and AWS AI; has taught 10k+ AI PM students

Mahesh has 20 years of experience building products on the AI teams at Meta, Microsoft, and AWS. He has worked across every layer of the AI stack, from AI chips to LLMs, and has a deep understanding of how companies use AI agents to ship value to customers. His AI work has been featured at the NVIDIA GTC conference and Microsoft Build, and on Meta blogs.

His mentorship has helped many students build real-time products and careers in the agentic AI PM space.
